[vc_row][vc_column css=”.vc_custom_1700553037018{background-color: #efefef !important;}”][vc_column_text css=”.vc_custom_1701162815677{margin-top: 0px !important;margin-right: 10px !important;margin-bottom: 30px !important;margin-left: 10px !important;}”]

The notion of omnichannel is now a given. Customers must be able to complete a purchase whatever the channel or medium. Digitizing all information and communicating instantly are essential to achieving this goal. This has major repercussions on IT and its organization, as well as on data processing methods.

[/vc_column_text][/vc_column][/vc_row][vc_row css=”.vc_custom_1701097008010{margin-top: 40px !important;}”][vc_column][vc_column_text]

Data processing

First of all, to avoid offending our IT contacts, we need to clarify what we mean by real time.

Real time doesn't exist

Simply because data has to be transported, processed and integrated, and each of these operations takes time: anywhere from milliseconds to minutes, or even hours for integration operations.

We will therefore use the image of a "flow of water" to describe data management. This metaphor rests on event-driven processing: processing is triggered by an identified event.

For example:

- a business application is asked to generate and drop off a data file: this is an event,

- we detect that a user has launched a query: this is an event.

Event-driven processing means that data is handled as soon as it is created. It is the opposite of "batch" processing, which collects a large amount of data and holds it before organizing, transferring, processing and integrating it. That built-in delay systematically rules out the immediacy we are aiming for.
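To make the contrast concrete, here is a minimal sketch in Python. The `Sale`, `EventDrivenProcessor` and `BatchProcessor` names are hypothetical and do not come from any particular product: the event-driven processor hands each sale downstream the moment it occurs, while the batch processor only stores it until an explicit close (for example, end of day).

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Sale:
    ticket_id: str
    sku: str
    quantity: int


@dataclass
class EventDrivenProcessor:
    """Hands each sale downstream as soon as the event occurs (hypothetical)."""
    handler: Callable[[Sale], None]

    def on_event(self, sale: Sale) -> None:
        # The event itself triggers processing: no waiting period.
        self.handler(sale)


@dataclass
class BatchProcessor:
    """Holds sales until an explicit close, e.g. end of day (hypothetical)."""
    handler: Callable[[Sale], None]
    pending: list = field(default_factory=list)

    def on_event(self, sale: Sale) -> None:
        # The sale is only stored; downstream systems see nothing yet.
        self.pending.append(sale)

    def close_period(self) -> None:
        # Everything accumulated since the last close is processed at once.
        for sale in self.pending:
            self.handler(sale)
        self.pending.clear()


# Usage: feed the same two sales to both processors.
seen_live, seen_batch = [], []
live = EventDrivenProcessor(seen_live.append)
batch = BatchProcessor(seen_batch.append)
for s in [Sale("T1", "SKU-42", 1), Sale("T2", "SKU-7", 2)]:
    live.on_event(s)
    batch.on_event(s)
print(len(seen_live), len(seen_batch))  # 2 0
batch.close_period()
print(len(seen_batch))  # 2
```

Until `close_period()` runs, downstream systems fed by the batch processor simply do not know the sales happened; that gap is the delay described above.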

Upstream and downstream of the data value chain: a focus on business processes

The point of sale generates sales receipts on the fly, from payment terminals, mobile devices, kiosks or virtual checkouts. These sales must be processed and passed on to the back office so that the company can report sales and taxes, declare the stock sold (and therefore outgoing), calculate margins, track bank payments, analyze customer purchasing behavior (whether or not the customer is a member), apply the correct sales prices to consumers and run promotional campaigns.

Many retailers still process this information at the end of the day (or period), after the close. However, with the growth of the web and the omnichannel approach, this need has evolved in GSA and GSS (large food retailers and specialist retailers).

Today, sales data is processed on an ongoing basis and fed back to central systems (ERP, CLOUD, DATAHUB, etc.). What's more, this information is also shared immediately with the entire ecosystem of business applications.

These developments have had a direct impact on the entire IT organization. Applications, workflows and their processing had to evolve. In concrete terms, the entire architecture of the information system had to adapt.

Let's take the case of available stock management:

The "sale of an item" event, whether it happens in a store, at a Drive or on an e-commerce site, must be shared immediately with upstream systems, the website and the brand's other points of sale. The operations and tasks triggered by this event keep the company's employees and customers informed immediately, particularly on the following points:

- the point of sale updates its stock, which can trigger automatic restocking,

- the e-commerce site updates the stock shown to customers using click & collect or e-reservation, to direct them to the right point of sale (or sales channel),

- the Drive, which shares its stock with the supermarket, updates it too.
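The stock example above is essentially a publish/subscribe pattern: one sale event, several consumers reacting to it. The sketch below is a minimal in-memory illustration under stated assumptions. `EventBus`, the `"item_sold"` event type, the store and SKU identifiers and the `REORDER_POINT` threshold are all hypothetical, and a real system would put a message broker between producers and consumers.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class EventBus:
    """Minimal in-memory publish/subscribe bus (hypothetical, for illustration)."""

    def __init__(self) -> None:
        self._subscribers = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        # Every subscriber receives the event immediately, in subscription order.
        for handler in self._subscribers[event_type]:
            handler(payload)


# Stock shared by the store and its Drive, keyed by (location, sku).
stock = {("store-01", "SKU-42"): 5}
restock_requests = []
web_availability = {}
REORDER_POINT = 4  # hypothetical restocking threshold


def update_store_stock(event: dict) -> None:
    key = (event["location"], event["sku"])
    stock[key] -= event["quantity"]
    if stock[key] <= REORDER_POINT:
        restock_requests.append(key)  # triggers automatic restocking


def update_web_availability(event: dict) -> None:
    # The e-commerce site republishes per-store stock for click & collect.
    key = (event["location"], event["sku"])
    web_availability[key] = stock[key]


bus = EventBus()
bus.subscribe("item_sold", update_store_stock)       # subscribed first, so the
bus.subscribe("item_sold", update_web_availability)  # web handler sees new stock
bus.publish("item_sold", {"location": "store-01", "sku": "SKU-42",
                          "quantity": 2, "channel": "drive"})
print(stock[("store-01", "SKU-42")])  # 3
print(restock_requests)               # [('store-01', 'SKU-42')]
print(web_availability)               # {('store-01', 'SKU-42'): 3}
```

A sale at the Drive decrements the stock it shares with the store, so the store, the restocking process and the website all see the same figure as soon as the event is published.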

Clearly, every business process is impacted.

Supply, logistics, marketing, customer relations... all will have to adapt and enrich their processes to work with data that will evolve very quickly throughout the day.

We could also mention:

- Business Intelligence: all universes and their performance indicators will have to be modified,

- customer communication: targeted communication campaigns will reach the market faster and be more relevant,

- marketing: the results of a promotional campaign will be known immediately,

- logistics: procurement will be triggered immediately, enabling greater agility in planning deliveries.

In short, "on-the-fly" information processing means that information relevant to decision-making is available without delay, for both the customer and the company.

[/vc_column_text][/vc_column][/vc_row][vc_row][vc_column][vc_column_text]

How can IT implement an application architecture adapted to "real-time" data processing?

Traditional database systems and workflow architectures can no longer meet the demands imposed by Big Data or streaming data. As opposed to batch mode, "real time" covers all data processing that requires short calculation times or depends on up-to-date information.

For example: updating the product repository, pushing promotions down to stores, reporting sales or stock levels...

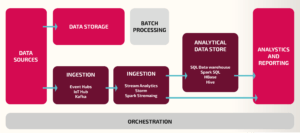

The results of data processing are therefore said to be "hot" for real-time processing and "cold" for batch processing. The impact is structural: it calls for a so-called Lambda architecture.

The "Lambda" architecture stacks several layers: it keeps a "batch" layer and adds a "real-time" layer and a "services" layer:

- the "batch" layer will handle larger data flows requiring longer processing times (e.g. repository initialization),

- the "real-time" layer (Speed Layer) will process data as it happens; this data is less voluminous but arrives in a constant, regular flow (e.g. till receipts),

- the "services" layer stores, integrates, aggregates and displays data (ETL, ERP, DATAHUB, etc.).

To sum up, the "Lambda" architecture essentially consists of setting up two processes within the same data analysis system:

- a process that enables data to be processed in batch mode

- a process to analyze data in real time (as it happens)
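The two processes can be sketched as a toy Lambda architecture computing units sold per SKU. This is a single-threaded illustration under simplifying assumptions, not a production design: the class and method names are hypothetical, and a real system must also handle events that arrive while the batch job is running. The master log records every receipt, the batch layer recomputes a "cold" view from the full history, the speed layer keeps a "hot" view of events since the last batch run, and the serving layer merges both at query time.

```python
from collections import Counter


class LambdaSalesView:
    """Toy Lambda architecture for units sold per SKU (hypothetical names)."""

    def __init__(self) -> None:
        self.master_log = []          # immutable record of every receipt line
        self.batch_view = Counter()   # "cold" results, recomputed in full
        self.speed_view = Counter()   # "hot" results since the last batch run

    def ingest(self, sku: str, qty: int) -> None:
        # Each event is appended to the master dataset AND counted by the speed layer.
        self.master_log.append((sku, qty))
        self.speed_view[sku] += qty

    def run_batch(self) -> None:
        # Batch layer: full recomputation over the whole history.
        view = Counter()
        for sku, qty in self.master_log:
            view[sku] += qty
        self.batch_view = view
        # Events now covered by the batch view are dropped from the speed layer.
        self.speed_view.clear()

    def query(self, sku: str) -> int:
        # Serving layer: merge cold and hot results.
        return self.batch_view[sku] + self.speed_view[sku]


v = LambdaSalesView()
v.ingest("SKU-42", 3)
v.run_batch()          # e.g. the nightly job
v.ingest("SKU-42", 2)  # a till receipt arriving after the batch run
print(v.query("SKU-42"))  # 5
```

The batch result stays authoritative and can always be rebuilt from the log, while the speed layer only has to cover the short window since the last batch run.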

Technologies such as Apache Kafka make this architecture possible. Kafka is an open-source platform for data-stream processing and message queuing, capable of handling up to several million messages per second from different producers and routing them to multiple consumers. Other solutions can meet these needs (via an API, for example, but that's another story).
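The idea that lets Kafka serve many producers and many consumers at once is the append-only log: producers append messages, and each consumer group tracks its own read position (offset). The sketch below is a deliberately simplified in-memory model of that idea. It is not Kafka's client API; `Topic`, `ConsumerGroup`, `poll` and the topic name are hypothetical, and partitions, replication and persistence are left out.

```python
from dataclasses import dataclass, field


@dataclass
class Topic:
    """Append-only log, loosely modelled on a single Kafka topic partition."""
    name: str
    log: list = field(default_factory=list)

    def produce(self, message) -> int:
        # Messages are appended, never modified; the offset is their position.
        self.log.append(message)
        return len(self.log) - 1


@dataclass
class ConsumerGroup:
    """Each group keeps its own read position (offset) on the topic."""
    group_id: str
    topic: Topic
    offset: int = 0

    def poll(self, max_records: int = 10) -> list:
        records = self.topic.log[self.offset:self.offset + max_records]
        self.offset += len(records)  # "commit" the new position
        return records


receipts = Topic("till-receipts")              # hypothetical topic name
for source in ("till-1", "kiosk-3", "web"):    # several producers
    receipts.produce({"source": source, "amount": 10.0})

stock_service = ConsumerGroup("stock", receipts)
bi_service = ConsumerGroup("bi", receipts)
stock_batch = stock_service.poll()
bi_first = bi_service.poll(max_records=2)
bi_rest = bi_service.poll()
print(len(stock_batch), len(bi_first), len(bi_rest))  # 3 2 1
```

Because reading does not remove messages, the stock service and the BI service each receive every receipt at their own pace, which is what allows one sales event to feed the whole application ecosystem.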

[/vc_column_text][/vc_column][/vc_row][vc_row css=”.vc_custom_1701100087312{background-color: #ffffff !important;}”][vc_column css=”.vc_custom_1701100121202{background-color: #ffffff !important;}”][vc_column_text css=”.vc_custom_1701100095029{margin-top: 20px !important;margin-right: 10px !important;margin-bottom: 20px !important;margin-left: 10px !important;background-color: #ffffff !important;}”]

As you can see, it's the whole architecture, and even the organization, of the IS that is impacted.

The need to share information obliges us to upgrade our systems to this data-stream processing approach, because it meets today's business needs and tomorrow's challenges.

At Univers Retail, our mission is to take a step back, understand the changes required and help implement them. This means working with business teams to support them in delivering projects, solutions and processes, and in the day-to-day changes required to realize the promise of real time.

[/vc_column_text][/vc_column][/vc_row][vc_row css=”.vc_custom_1682523226414{padding-bottom: 15px !important;background-color: #efefef !important;}”][vc_column][vc_column_text]

Do you have a Data-related project or would you like to talk to one of our experts?

[/vc_column_text][rt_button_style title="Contact us" link="url:https%3A%2F%2Fwww.universretail.com%2Fcontact%2F|title:Contact" animation="fadeInUp"][/vc_column][/vc_row]